We are excited to reveal the details of the liquid-cooled Dell PowerEdge XE9680L with NVIDIA B200 GPUs: simply put, the densest rack-scale architecture in the industry.

- The XE9680L delivers 8 GPUs in a 4U form factor.

- The XE9680L comes standard with 12 PCIe 5.0 full-height, half-length (FHHL) slots, giving customers 20% more FHHL PCIe 5.0 density. This means 2x more capacity for high-speed I/O for the North/South AI fabric, Dell PowerScale GPUDirect storage connectivity, and seamless integration of accelerators.

- We're also improving energy efficiency by 2.5x with new direct-to-chip cooling technology.

The 4U XE9680L is engineered with a rack-scale architecture, featuring pre-validated integration components such as liquid coolant, power distribution, and networking. This design provides a turnkey infrastructure solution for Dell AI Factory applications.

The XE9680L is slated for release in the second half of 2024. It will offer industry-leading PCIe expansion capacity and a variety of factory-integrated rack configurations, incorporating both Dell and third-party infrastructure components.

Dell Technologies is poised to leverage NVIDIA’s Blackwell B200 Platform

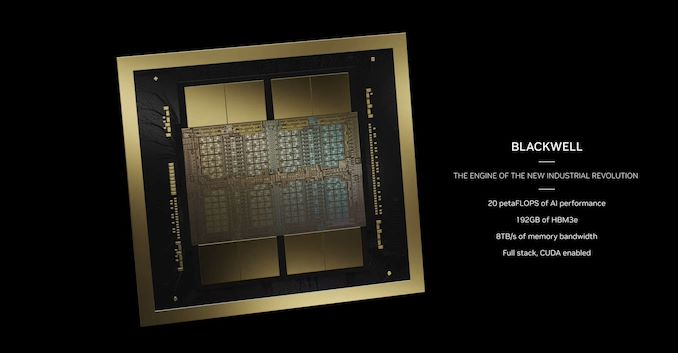

NVIDIA recently announced its next-generation AI platform, the NVIDIA Blackwell B200. This platform is set to power a new era of computing, enabling organizations to build and run real-time generative AI on trillion-parameter large language models.

Unprecedented Performance

The Blackwell B200 GPU more than doubles the transistor count of the existing H100, packing 208 billion transistors. It provides a staggering 20 petaflops of AI performance from a single GPU, a significant leap from the maximum 4 petaflops of AI compute offered by a single H100.

The B200 GPU is equipped with 192GB of HBM3e memory, offering up to 8 TB/s of bandwidth. This powerhouse of AI performance delivers 3X the training performance and 15X the inference performance of previous generations.
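To put the per-GPU figures above in server terms, here is a minimal sketch that scales them to the 8-GPU configuration described in this post. The constant and function names are hypothetical, and the results are simple multiples of the quoted peak numbers rather than measured values. Vendor peak figures are often quoted at different numeric precisions, so the per-GPU ratio should be read as a rough upper bound.

```python
# Peak per-GPU figures as quoted in this post (not measured values).
B200_HBM_GB = 192        # HBM3e capacity per B200 GPU
B200_HBM_BW_TBS = 8      # peak HBM3e bandwidth per B200 GPU, TB/s
B200_AI_PFLOPS = 20      # peak AI petaflops per B200 GPU
H100_AI_PFLOPS = 4       # peak AI petaflops per H100 GPU
GPUS_PER_SERVER = 8      # the XE9680L hosts an 8-way HGX B200 board


def server_totals(gpus: int = GPUS_PER_SERVER) -> dict:
    """Scale the quoted per-GPU peaks linearly to a whole server."""
    return {
        "hbm_gb": B200_HBM_GB * gpus,
        "hbm_bw_tbs": B200_HBM_BW_TBS * gpus,
        "ai_pflops": B200_AI_PFLOPS * gpus,
    }


totals = server_totals()
per_gpu_ratio = B200_AI_PFLOPS / H100_AI_PFLOPS

print(f"HBM3e per server: {totals['hbm_gb']} GB")
print(f"Aggregate HBM bandwidth: {totals['hbm_bw_tbs']} TB/s")
print(f"Peak AI compute per server: {totals['ai_pflops']} petaflops")
print(f"Quoted B200/H100 peak ratio: {per_gpu_ratio:.0f}x")
```

Under these assumptions, one 8-GPU server carries 1,536 GB of HBM3e, which is the figure that matters when sizing a model that must fit in GPU memory.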

Dual-Die Configuration

The B200 GPU is not a single GPU in the traditional sense. Instead, it comprises two tightly coupled dies that function as one unified CUDA GPU. The two dies are linked via a 10 TB/s NV-HBI (NVIDIA High Bandwidth Interface) connection, ensuring they operate as a single, fully coherent chip.

The NVIDIA Blackwell B200 platform represents a significant advancement in AI technology. As companies around the world gear up to incorporate this technology into their offerings, we can expect to see a new wave of innovative, high-performance solutions hitting the market.

As part of its ongoing efforts to help customers with the complexities of deploying large-scale data centers, Dell Technologies is also unveiling new rack-scale solutions. Available in air-cooled and liquid-cooled designs, they are set to be the most compact and energy-efficient rack-scale solutions on the market. They are designed to work with any data center cooling method and come factory integrated, ready for deployment.

Two designs are available:

- A 70 kW design that uses air cooling with Rear Door Heat Exchangers (RDHx) and supports 64 GPUs, ideal for NVIDIA H100/H200/B100.

- A 100 kW design that uses liquid cooling with RDHx and supports 72 B200 GPUs. This is the most compact rack-scale architecture in the industry.
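The two rack designs can be compared with a little arithmetic. The sketch below is illustrative only: it assumes both designs are built from 8-GPU servers (stated in this post for the XE9680L, assumed here for the air-cooled design), and the `RackDesign` class and its fields are hypothetical names. The "kW per GPU" value is the rack power figure divided by GPU count, so it covers CPUs, networking, and cooling overhead as well, and is not a GPU power rating.

```python
from dataclasses import dataclass

GPUS_PER_SERVER = 8  # assumption: both designs use 8-way GPU servers


@dataclass(frozen=True)
class RackDesign:
    name: str
    rack_power_kw: float  # rack power figure quoted in the post
    gpus: int             # GPUs supported per rack, as quoted

    @property
    def servers(self) -> int:
        """Number of 8-GPU servers implied by the GPU count."""
        return self.gpus // GPUS_PER_SERVER

    @property
    def kw_per_gpu(self) -> float:
        """Rack-level power budget per GPU (includes non-GPU overhead)."""
        return self.rack_power_kw / self.gpus


air = RackDesign("air-cooled + RDHx", 70.0, 64)
liquid = RackDesign("liquid-cooled + RDHx", 100.0, 72)

for design in (air, liquid):
    print(
        f"{design.name}: {design.servers} servers, "
        f"{design.kw_per_gpu:.2f} kW of rack budget per GPU"
    )
```

Under these assumptions the air-cooled rack holds 8 servers at roughly 1.09 kW per GPU, and the liquid-cooled rack holds 9 servers at roughly 1.39 kW per GPU.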

The PowerEdge XE family of servers has been leading the way in providing the computing power needed to use generative AI in customer applications. Dell Technologies has announced support for both the B100 and B200 models and has taken into account customer feedback about the need for top-tier performance while also improving on cooling and power requirements as generative AI becomes more widespread within organizations. The XE9680L sets a new standard for performance and energy efficiency in a more compact, denser 4U form factor, complete with an HGX 8-way B200 GPU configuration.

To close, here are some key features of this design:

- Rack-scale architecture backed by on-site deployment services and Dell’s trusted end-to-end AI solutions

- Speed up your enterprise generative AI objectives with optional factory integration of pre-validated networking, power distribution, and cooling options

- Simplify infrastructure design and serviceability requirements with a suite of ready-to-use liquid coolant distribution solutions

- Make scaling your workflow easier with Dell’s holistic approach to solution design, sizing, testing, optimization, and performance tuning throughout your AI journey

- Avoid interoperability issues and improve your time to value with a full rack solution and a growing suite of services for AI
