Category: AI/ML

-

PowerScale OneFS 9.9 – CoS & QoS Support Introduced

OneFS 9.9: Unleashing AI Performance with CoS/QoS Tagging

-

PowerScale OneFS 9.9 – Relentless Innovation

“Optimized thread groups, reduced lock contention, NVMe direct-write… these aren’t just buzzwords, they’re the building blocks of a storage revolution. OneFS 9.9 delivers a finely tuned engine that pushes the boundaries of performance, making it the ultimate fuel for your AI ambitions.” All of which we’ll unpack in this post.…

-

Introduction to NVIDIA Inference Microservice, aka NIM

At NVIDIA GTC 2024, NVIDIA announced the major release of NVIDIA Inference Microservices (NIM). NIM is part of the portfolio that makes up the NVIDIA AI Enterprise stack. Why the focus on inferencing? Because when we look at use cases for Generative AI, the vast majority of them are…

-

Dell is making it easy to stand up Digital Assistants!

By choosing Dell’s generative AI digital assistant solutions, businesses can bypass the complexity and high cost of building AI systems from scratch. Instead, they can leverage Dell Technologies’ comprehensive, integrated, and scalable solutions to quickly realize the benefits of AI, drive innovation, and stay competitive in the digital age.

-

Announcing the Dell PowerEdge XE9680L – Purpose-built for GPU density with extreme AI performance

The XE9680L sets a new standard for performance and energy efficiency in a denser 4U form factor, complete with an HGX 8-way B200 GPU configuration.

-

PowerEdge Soundbytes Ep20: Dell RAG Demo Update

In a previous episode, I sat down with David O’Dell from the Dell Technical Marketing Engineering team to talk about RAG, and David walked me through his RAG demo. Since then, David has been busy improving the demo. Those improvements are so awesome that I had…

-

Converting Hugging Face LLMs to TensorRT-LLM for Use in the Triton Inference Server

Introduction: Before getting into this blog proper, I want to take a minute to thank Fabricio Bronzati for his technical help on this topic. Over the last couple of years, Hugging Face has become the de facto standard platform for storing anything to do with generative AI. From models to datasets to…

-

If you work in AI, you should know about MLPerf: MLPerf v3.1 Training Benchmark Results

Learn about MLPerf and explore the latest MLPerf v3.1 Training benchmark results. The artificial intelligence (AI) community is driven by innovation, and staying at the forefront requires the ability to benchmark and compare the performance of AI systems accurately. This is where MLCommons and MLPerf come into play, as they…

-

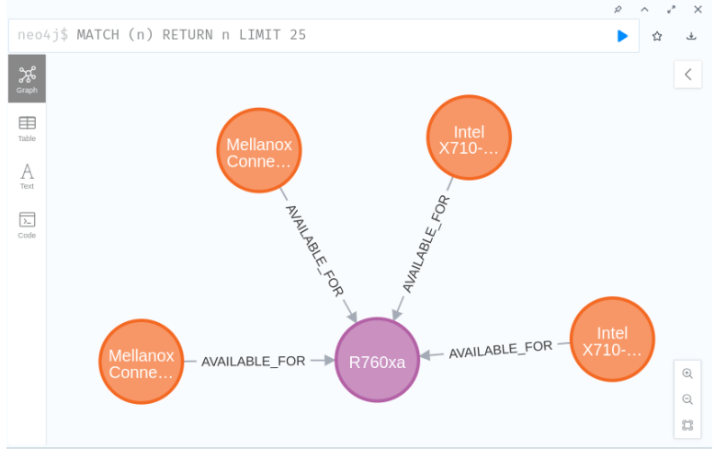

How to Ingest a PDF File into a Graph Database

In this video, I show the end-to-end process required to ingest a PDF file into a graph database using Python. Many tutorials on graph databases omit some of the steps needed to ingest data into the database, especially the steps around extracting and creating entities and relationships.…

-

How to Ingest a PDF File into a Vector Database

In this video, I show the end-to-end process required to ingest a PDF file into a vector database using Python. Vector databases are the most popular choice of information store for Retrieval-Augmented Generation (RAG). This video is a companion to my blog post on the differences…